Computer use agents, or CUAs, are an AI technology that hit the mainstream in January 2025, when OpenAI released Operator as a research preview. CUAs have incredible potential to open up new use cases for AI because they give AI models direct access to applications and tools that run in desktop environments. For example, a CUA can open a real browser, visit any website, read its content, and even submit forms.

When we dug into CUAs at Pearl, the first questions we asked were:

- What problems can CUAs help us solve?

- How can we use them safely?

We were especially intrigued by the idea of using CUAs to reduce the work of electronic health record (EHR) integrations.

EHR integrations are complex for Pearl because we partner with independent practices across the country. We don’t restrict them to any specific EHR vendor. Instead, we meet them where they are, supporting whichever technologies they’ve chosen. But that creates challenges when we want to extract EHR data. Each vendor has its own API, and there’s no way to build a “one size fits all” integration. Traditionally, companies solve this by writing and maintaining separate integration code for each vendor and configuration they support.

CUAs presented a tantalizing scenario: what if we could sidestep the need for one-by-one integrations? Instead, we’d leverage LLMs. We’d create prompts to express what tasks we need the AI to complete, point it at the EHR login page, and let it figure out the rest! It would sign in, examine the user interface, and learn how to satisfy our requests.

But before we rolled up our sleeves and started prompting, we knew we had more work to do. We had to responsibly address the topics of patient data safety and HIPAA compliance.

How CUAs Work

A CUA system has four main parts:

- The LLM¹, which runs either in a vendor’s cloud (e.g., GPT-4) or on infrastructure you host yourself (e.g., open-weight models like Llama or Qwen).

- The agent, which runs on a desktop environment and communicates with the LLM. These agents are usually open-source.

- A set of “tools,” chosen by the CUA developer, that let the agent interact with the desktop. A tool could be a specific application, like Microsoft Excel or Google Chrome, or it could be mouse and keyboard control of the entire machine.

- A system prompt that tells the LLM which tools are available and how to use them.
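To make the last two components concrete, here is a minimal Python sketch of how a developer might describe tools and turn them into system-prompt text. The `Tool` record, the tool names, and the prompt wording are hypothetical illustrations; each vendor’s computer-use API defines its own tool format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    """One capability the CUA developer exposes to the agent (hypothetical shape)."""
    name: str
    description: str


def build_system_prompt(tools: list) -> str:
    """Assemble a system prompt that tells the LLM which tools exist and how to use them."""
    lines = [
        "You control a desktop through an agent.",
        "You may only use the tools listed below.",
        "After each action, wait for a screenshot before deciding the next step.",
        "",
        "Available tools:",
    ]
    for tool in tools:
        lines.append(f"- {tool.name}: {tool.description}")
    return "\n".join(lines)


# Hypothetical tool set: one application-level tool plus mouse/keyboard control.
TOOLS = [
    Tool("open_app", "Open a desktop application by name, e.g. 'Chrome'."),
    Tool("click", "Click the mouse at pixel coordinates (x, y)."),
    Tool("type_text", "Type a string with the keyboard; end with Enter if needed."),
    Tool("screenshot", "Capture the screen and send it back to the LLM."),
]

print(build_system_prompt(TOOLS))
```

Keeping the tool list in code, rather than hand-writing the prompt, means the prompt can never advertise a tool the agent doesn’t actually implement.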

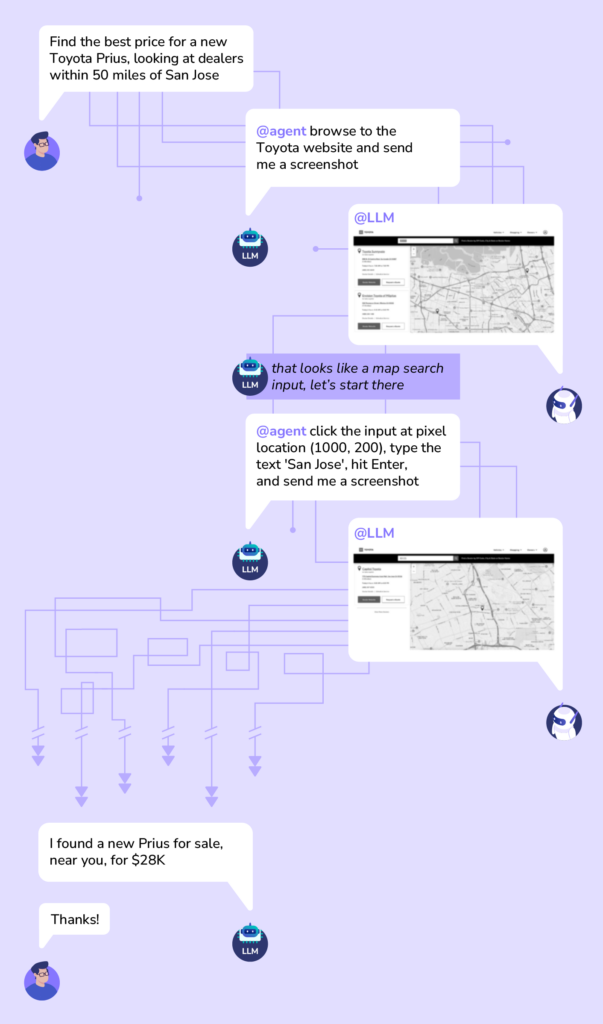

Here’s how these parts work together in a typical session:

- The user prompts the LLM. For example, “Find the best price for a new Toyota Prius, looking at dealers within 50 miles of San Jose.”

- The LLM reviews its list of tools, decides that Google Chrome is the best app to use, and that toyota.com is the best website to visit.

- The LLM tells the agent, “Open the Chrome app, browse to toyota.com, and send me a screenshot.”

- The agent, running on a desktop, follows these instructions, and uploads the screenshot to the cloud.

- The LLM reviews the screenshot using its image-processing capabilities, and finds the bold text ‘Shopping’.

- This appears to be a link, so it tells the agent, “Use the mouse to click at pixel coordinates (2190, 200), then send me another screenshot.”

- The agent follows these instructions.

- The LLM reviews the screenshot, and sees a Zip Code input.

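- San Jose has many ZIP codes, so the LLM arbitrarily picks one: 95125. It tells the agent, “Use the keyboard to type the characters ‘95125’, then hit Enter, then send me another screenshot.”

This cycle of instructions, actions, and screenshots repeats until the LLM decides the task is complete.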
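The steps above form a loop: the LLM decides, the agent acts, and a screenshot goes back to the LLM. Here is a minimal, self-contained Python sketch of that loop. The `Action` format, `FakeDesktop`, and `scripted_llm` are hypothetical stand-ins: the fake LLM simply replays the Prius steps instead of reading screenshots, and opening Chrome and browsing to toyota.com are collapsed into a single `open_url` action.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class Action:
    """One instruction from the LLM to the agent (hypothetical format)."""
    kind: str       # "open_url", "click", "type_text", or "done"
    x: int = 0
    y: int = 0
    text: str = ""


@dataclass
class FakeDesktop:
    """Stands in for a real desktop and records what the agent did."""
    log: list = field(default_factory=list)

    def perform(self, action: Action) -> None:
        if action.kind == "open_url":
            self.log.append(f"open {action.text}")
        elif action.kind == "click":
            self.log.append(f"click ({action.x}, {action.y})")
        elif action.kind == "type_text":
            self.log.append(f"type {action.text!r}")
        else:
            raise ValueError(f"unknown action: {action.kind}")

    def screenshot(self) -> bytes:
        # A real agent would capture the screen; this returns a placeholder.
        return f"screen after {len(self.log)} actions".encode()


Decide = Callable[[str, Optional[bytes]], Action]


def scripted_llm(steps: list) -> Decide:
    """Replays a fixed plan. A real LLM would read the prompt and each screenshot."""
    plan = iter(steps)

    def decide(prompt: str, screenshot: Optional[bytes]) -> Action:
        return next(plan, Action("done"))

    return decide


def run_agent(decide: Decide, prompt: str, desktop: FakeDesktop, max_steps: int = 20) -> list:
    """The core CUA loop: the LLM decides, the agent acts, a screenshot goes back."""
    screenshot = None  # the LLM sees only the prompt before the first action
    for _ in range(max_steps):
        action = decide(prompt, screenshot)
        if action.kind == "done":
            break
        desktop.perform(action)
        screenshot = desktop.screenshot()  # in a real CUA, uploaded to the LLM
    return desktop.log


plan = [
    Action("open_url", text="https://www.toyota.com"),
    Action("click", x=2190, y=200),
    Action("type_text", text="95125\n"),  # "\n" stands in for pressing Enter
]
prompt = ("Find the best price for a new Toyota Prius, "
          "looking at dealers within 50 miles of San Jose.")
for entry in run_agent(scripted_llm(plan), prompt, FakeDesktop()):
    print(entry)
```

The `max_steps` cap is worth keeping in a real agent too: because the LLM decides when it is done, a hard limit stops a confused model from looping indefinitely.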

CUAs, HIPAA, and Data Safety

Now that we understand how CUAs work, we can look at how each part relates to HIPAA and data safety.

Both Anthropic and OpenAI will sign a Business Associate Agreement (BAA) covering use of their APIs. But what does that actually mean? If you sign a BAA with Anthropic, does that make Claude’s computer use capability HIPAA-compliant? The answer is complex, and it depends on which part of the CUA we’re referring to.

In short, the BAA only covers data that’s consumed directly by the LLM. Specifically, that’s:

- All of your prompts

- Any data that the agent uploads to the LLM

The agent itself, and the tools that you provide to the agent, are not covered.

This makes sense from a practical standpoint. The AI vendor doesn’t know which agent you’re using. It could be an open-source library, running on infrastructure that the AI vendor doesn’t control. There’s no way for them to ensure that these parts are HIPAA-compliant.

More importantly, the BAA doesn’t govern two critical things:

- The BAA doesn’t guarantee that users will only ask the AI to perform HIPAA-compliant tasks.

- The BAA doesn’t guarantee that the AI will choose to carry out those tasks in a HIPAA-compliant manner.

Let’s unpack these a bit.

Here’s a deliberately silly example prompt: “Log into Epic, extract patient John Smith’s latest records, then write a poem about them and post it on Threads.” This would obviously be a HIPAA violation. But here’s how it looks from the AI vendor’s perspective:

- The prompt is received by the AI.

- The AI instructs the agent to browse through Epic’s interface. Along the way, the agent sends several screenshots to the AI.

- The AI extracts John’s data from those screenshots, and creates a poem.

- The AI instructs the agent to browse to the Threads website and post the poem.

From the vendor’s perspective, this would be fully compliant with the BAA! That’s because the BAA only applies to the data that the AI received: the initial prompt, and the Epic screenshots. The AI vendor will protect that data in compliance with HIPAA. But the outputs of the AI — the instructions that it sends to the agent — are not covered by the BAA.
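The coverage rule in this example comes down to the direction each piece of data travels. Here is a toy Python model of that reading, applied to the Threads scenario: data flowing into the LLM is covered, and instructions flowing out of it are not. The `DataFlow` type and the one-line rule are illustrative simplifications of the explanation above, not a legal test.

```python
from dataclasses import dataclass
from enum import Enum


class Direction(Enum):
    INTO_LLM = "into the LLM"      # prompts and uploaded screenshots
    OUT_OF_LLM = "out of the LLM"  # instructions sent to the agent, and what follows


@dataclass(frozen=True)
class DataFlow:
    description: str
    direction: Direction


def covered_by_baa(flow: DataFlow) -> bool:
    """Simplified model: the BAA covers only data the LLM consumes."""
    return flow.direction is Direction.INTO_LLM


threads_scenario = [
    DataFlow("Prompt: extract John Smith's records, write a poem, post it", Direction.INTO_LLM),
    DataFlow("Screenshots of Epic containing John's data", Direction.INTO_LLM),
    DataFlow("Instruction to the agent: post the poem on Threads", Direction.OUT_OF_LLM),
]

for flow in threads_scenario:
    status = "covered" if covered_by_baa(flow) else "NOT covered"
    print(f"{status:>11}  {flow.description}")
```

The one flow that actually exposes John’s data is the one the BAA doesn’t reach.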

Of course, that example was kind of ridiculous. You’re probably asking, “If I don’t write silly prompts, then I’m covered by the BAA, right?” And the answer is, “Not quite.” You see, the bigger problem is that AIs can make mistakes.

Here’s a more realistic prompt: “Log into Epic, extract patient John Smith’s latest records, and send them to his cardiologist, Dr. Chen.” This looks fine, right? So what could go wrong?

First, we have to remember that LLMs are stochastic, which is a fancy way of saying that you can’t always predict what they’ll do. Getting a certain result today doesn’t mean you’ll get the same result tomorrow. In the extreme case, the AI really could decide to upload John’s data to social media! This is unlikely, but it would still be compliant with the BAA.

The more likely scenario is that the AI will make innocent mistakes. For example: what if the AI can’t find Dr. Chen’s contact information in the EHR? It could decide that the “next best thing” is to search Google. Now you’re at the mercy of search results to determine whether John’s protected health information (PHI) goes to the right person. You could wind up with a PHI exposure, even though the LLM itself is covered by a BAA.
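Both failure modes trace back to how LLMs generate output: at each step, the model samples from a probability distribution rather than always returning the same answer. Here is a toy Python illustration of temperature sampling. It pretends the model scores three whole actions at once (real models score individual tokens), and the option names and scores are made up for the Dr. Chen scenario.

```python
import math
import random


def softmax(logits: list, temperature: float) -> list:
    """Convert raw scores into probabilities; lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next(options: list, logits: list, temperature: float, rng: random.Random) -> str:
    """Pick one option at random, weighted by its probability."""
    probs = softmax(logits, temperature)
    return rng.choices(options, weights=probs, k=1)[0]


# Hypothetical next steps after the model fails to find Dr. Chen's contact info.
options = ["ask a human", "search the EHR again", "search Google"]
logits = [2.0, 1.5, 1.0]

rng = random.Random()  # unseeded, so counts differ from run to run
picks = [sample_next(options, logits, temperature=1.0, rng=rng) for _ in range(1000)]
for option in options:
    print(f"{option:<22} chosen {picks.count(option)} times out of 1000")
```

Even though “ask a human” scores highest, the riskier option still gets picked a meaningful fraction of the time. Lowering the temperature concentrates probability on the top option, but it can’t help if the top-scoring option is itself the wrong one.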

Now, you may be wondering whether these are temporary concerns. Will the AI vendors build a platform that solves these problems? Could we reach a point where they’ll sign a BAA that covers every aspect of a CUA system? Well, if they did, their approach to HIPAA would have to go far beyond just data management and data security. They’d have to train the AI to make HIPAA-compliant decisions. This would be extremely challenging, because the rules for sharing patient data depend on so many things! It’s all about context. Even humans have trouble getting it right. Here are some of the questions the AI would need to answer:

- Who am I sharing the data with? Is that specific person allowed to receive it?

- What specific type of data am I sharing, and is it in scope for the BAA?

- Why am I sharing this data? Is it for an acceptable reason?

Even if they could solve all of these problems, it’s hard to imagine them signing BAAs that take liability for getting it right every time.

Using CUAs Safely

Given these risks, here’s how we’re approaching CUAs at Pearl:

- We’re waiting until CUA tools are out of preview and more mature.

- We’ll only use HIPAA-compliant AI models, with signed BAAs in place.

- We’ll run agents on secure, trusted infrastructure that meets our compliance standards.

- We’ll limit what CUAs can do, restricting access to read-only accounts and blocking non-essential internet access.

- We’ll start with low-risk use cases, where human oversight is easy and the impact of a mistake is small.
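One way to enforce some of these commitments in code is a policy check that sits between the LLM and the agent and reviews each proposed action before it runs. Here is a minimal Python sketch. The action kinds, the `ehr.example.com` host, and the allow/block/escalate policy are hypothetical, and a filter like this complements, rather than replaces, the read-only accounts and network-level blocking described above.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class ProposedAction:
    """An action the LLM wants the agent to take (hypothetical format)."""
    kind: str         # e.g. "open_url", "click", "type_text", "send_email"
    target: str = ""  # the URL, for navigation actions


# Hypothetical policy values; a real deployment would load these from configuration.
ALLOWED_HOSTS = {"ehr.example.com"}
LOW_RISK_KINDS = {"click", "scroll", "type_text", "screenshot"}


def review(action: ProposedAction) -> str:
    """Return "allow", "block", or "escalate" (pause for a human) for one action."""
    if action.kind == "open_url":
        host = urlparse(action.target).hostname or ""
        # Exact host match, so look-alikes such as ehr.example.com.evil.net fail.
        return "allow" if host in ALLOWED_HOSTS else "block"
    if action.kind in LOW_RISK_KINDS:
        # Writes are prevented by the read-only EHR account, not by this check.
        return "allow"
    # Anything else, including unknown action kinds, could send data somewhere,
    # so it waits for a person instead of running automatically.
    return "escalate"


for action in [
    ProposedAction("open_url", "https://ehr.example.com/patients/123"),
    ProposedAction("open_url", "https://www.google.com/search?q=Dr+Chen+cardiologist"),
    ProposedAction("send_email", "dr.chen@example.org"),
]:
    print(f"{review(action):<8} {action.kind} {action.target}")
```

Applied to the earlier Dr. Chen scenario, the fallback Google search is blocked, and any attempt to send John’s records goes to a person for review instead of happening automatically.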

What’s Next?

CUAs represent a leap forward in how AI can help people do meaningful, complex work. At Pearl Health, we’re exploring how this technology might one day help providers spend less time on systems and more time with patients. But we’re also committed to keeping privacy, safety, and trust at the core of everything we do.

Stay tuned as we continue to evaluate new tools — and share what we learn along the way.

¹ Technically, CUAs require a multimodal AI that can process both images and text, while LLMs, strictly speaking, process only text. But the term “LLM” is increasingly applied to both types of AI.